The DPOInsider covers the latest news and developments in data compliance and privacy. The DPO's favourite weekly read ☕️

Let’s talk about ChatGPT and privacy

The AI tool has undoubtedly taken the world by storm. Within just a few months, it has already hit over 100 million active users.

As you have probably heard, this makes it the fastest-growing consumer application ever launched.

The launch hasn’t been without concerns, and those concerns span a number of different industries.

But what does it mean for privacy?

ChatGPT is ultimately fueled by personal data.

It is built on a large language model, which requires very large amounts of data to function and improve.

OpenAI has used over 300 billion words to train its language model. This data was systematically scraped from the internet and includes books, articles, websites and, potentially, personal data obtained without consent.

Even this newsletter could be used to train this language model.

I was not asked for consent for this. And this is where the issue lies.

This data is publicly available, but using it can still breach what we call contextual integrity, a fundamental principle in legal discussions of privacy. It requires that information pertaining to an individual is not used outside the context in which it was originally produced.

On top of this, how do we check what data OpenAI stores on us? Those of us familiar with the GDPR know that the right of access is a fundamental part of it, and the jury is still firmly out on whether ChatGPT is compliant with the GDPR at all.

And what about our right to be forgotten? This is an important mechanism for cases where information is inaccurate or misleading, which is exactly the kind of output that ChatGPT has consistently returned to users.

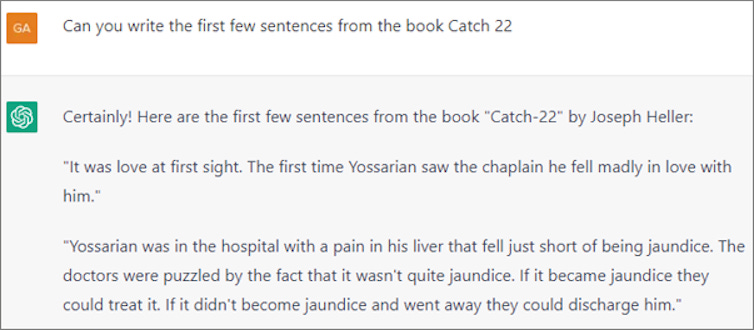

Furthermore, the output delivered back to users can include verbatim copyrighted text.

This all gets a little more suspect when we look at how prompts can be a form of user data.

When we ask the tool to answer questions or perform tasks, we may inadvertently hand over sensitive information and put it in the public domain.

Legal professionals may ask the tool to review a contract or document, and engineers or developers could ask it to review their code. In essence, this means that valuable IP could now be part of OpenAI’s database.

For privacy professionals (and people in many other industries), does this mean that ChatGPT and similar technologies (Google is releasing its own competitor) mark a tipping point for AI and, specifically, for AI privacy regulation?

These privacy risks should at least be a warning for consumers and legislators. And we should all be more careful about the data that we input into these tools.